The title says it all! Featured in UCD CS News, May 2024, but the full article is here. That’s what you’ll probably want to read 🙂

I had so much fun presenting the closing ITiCSE 2023 keynote “Chat Overflow: Artificially Intelligent Models for Computing Education – renAIssance or apocAlypse?” with Paul Denny, Juho Leinonen and James Prather. Rather than ramble on here, I’ll just provide links to the video and slides 🙂 Thanks to Mikko-Jussi Laakso and the ITiCSE 2023 team for inviting us!

In 2006 Renzo Davoli chaired the ACM SIGCSE Innovation and Technology in Computer Science Education Conference (ITiCSE 2006) in Bologna, Italy. Renzo purchased a brass bell (pictured below). At the conference he would walk through the coffee break area ringing the bell to encourage folks to return to sessions. The bell then went “missing”, but it is believed to have sat in Bologna for over a decade until ITiCSE 2017 which, conveniently for the bell, was also in Bologna, chaired by Renzo and Mikey Goldweber. The bell then began a new life of travel. It was given to Irene Polycarpou and Janet Reed who chaired ITiCSE 2018 in Larnaca, Cyprus. The bell then traveled by itself via post to Aberdeen, Scotland for ITiCSE 2019 where Bruce Scharlau and Roger McDermott rang the bell.

Then the bell, aided by a pandemic, sat again for years. In 2022 the bell traveled all on its own by post to Dublin, Ireland where Keith Quille and I spent some time ringing the bell at ITiCSE 2022. It then sat in Dublin on my desk for a year until this week when – the bell was happy to hear – it was escorted by hand on a Finnair flight to Turku, Finland. This morning I handed it to Mikko-Jussi Laakso who just a few minutes ago rang the bell to open ITiCSE 2023. The bell will later this week travel – accompanied again, this time by Mattia Monga – to its ancestral home of Italy where Mattia will, in one year, ring the bell for ITiCSE 2024 in Milan.

Where the bell will go from there is not yet known. However, given that ITiCSE 2023 is the first physical-attendance-only conference since 2019 and has around 250 people in attendance, the bell has a long, healthy future of European travel ahead at many ACM ITiCSE conferences.

Thanks to Mikey Goldweber and Bruce Scharlau for helping me piece together the history of the ITiCSE bell which I am happy to pass on to the community.

Joyce Mahon, a Computer Science PhD student at University College Dublin supervised by Dr. Brett Becker and Dr. Brian Mac Namee, has put ChatGPT to the test: the Leaving Certificate Computer Science (LCCS) – Higher Level no less!

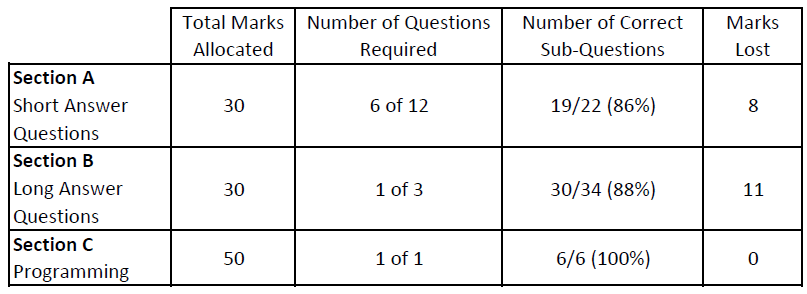

In short: yes, it can, answering nearly all questions correctly. Computer Science at Leaving Certificate level consists of two assessment components: an end of course examination (70%) and coursework assessment (30%). We used the 2021 LCCS Higher Level examination, which comprised 16 questions in three sections (A, B and C), not all of which are mandatory. These questions are broken down into a total of 62 sub-questions. We extracted the text from the 62 sub-questions in the 2021 LCCS exam and presented these to ChatGPT. No extra information was provided and each sub-question was presented in a fresh chat session. We then corrected the answers received from ChatGPT using the official Leaving Certificate marking scheme. As we expected, the majority of these (55/62) were answered correctly.

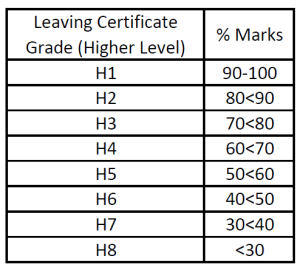

There is an element of choice in this examination. If only ChatGPT’s best answers are counted, it would receive very close to (or possibly exactly) 100 percent, depending on the marker and their interpretation of the marking criteria. Of course, this means that ChatGPT would receive a H1 – the highest grade possible (in this particular exam – higher level, 2021). As the examination comprises 70% of the overall grade for this subject, a grade of H3 could potentially be achieved even before considering the Leaving Certificate coursework component worth 30% (see table below).
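The arithmetic behind that H3 can be sketched in a few lines. This is only an illustration: the 70/30 weighting comes from the text above, while the grade bands are the standard Leaving Certificate Higher Level bands as we understand them (an assumption, since the table itself is an image), and the function names are made up for this example.

```python
# Weights from the article: exam 70%, coursework 30% (as whole percentages
# so the arithmetic stays exact).
EXAM_WEIGHT = 70
COURSEWORK_WEIGHT = 30

# Assumed Higher Level grade bands: (lower bound in percent, grade).
# These are not taken from the article's table, which is an image.
HIGHER_LEVEL_BANDS = [
    (90, "H1"), (80, "H2"), (70, "H3"), (60, "H4"),
    (50, "H5"), (40, "H6"), (30, "H7"), (0, "H8"),
]


def overall_percent(exam_percent, coursework_percent):
    """Combine the two components into one overall percentage."""
    return (EXAM_WEIGHT * exam_percent
            + COURSEWORK_WEIGHT * coursework_percent) / 100


def grade(percent):
    """Map an overall percentage to a Higher Level grade."""
    for lower_bound, label in HIGHER_LEVEL_BANDS:
        if percent >= lower_bound:
            return label
    raise ValueError("percent must be between 0 and 100")


# A perfect exam with no coursework marks at all still reaches 70% overall.
exam_only = overall_percent(100, 0)
print(exam_only, grade(exam_only))
```

Under these assumptions, full marks on the paper alone land exactly on the lowest boundary of the H3 band, which is why the claim depends on the marker awarding very close to 100 percent.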

Generative AI models such as ChatGPT are not infallible. Does this mean that they should be avoided? We are taught early on to not believe everything that we read. Perhaps today’s students should also be taught to not believe everything a large language model provides. Similarly, in important situations, people should always double check things. Carpenters measure twice so they cut (successfully) once. Students in traditional exams are encouraged to double-check their answers. The same applies when using ChatGPT. If anything, this is a good lesson for students to learn.

Besides, the performance of LLMs on certain tasks (such as the one here) is impressive, and they are constantly improving. In our experiment many solutions contained more detail than the marking scheme prescribes. These models also have other benefits. For instance they can provide students (and educators) with unlimited examples, alternative approaches, and help with “blank page syndrome”. These models can even mark their own answers, although we have not tested this rigorously yet.

In a word: no. The Leaving Certificate is an invigilated exam, and was invigilated long before Generative AI, and for good reason. The advent of this technology is just another (albeit fast and large) step in the same direction humans have been going in for ages. In addition, 30% of the LCCS grade comes from coursework assessment, which is overseen by the student’s teacher. While ChatGPT could be used for this, the nature of this project work is much more involved than the questions found on a paper exam. The technology is likely still some way off from doing as well on this coursework as it does on the paper exam.

No, ChatGPT has been shown to be capable of generating acceptable answers for multiple exam types in various subjects. Recently ChatGPT passed a Higher Level English Leaving Certificate exam with ease (77%) and OpenAI has simulated test runs of various professional and academic exams.

ChatGPT provides a rapid response and a personalized learning experience where students can actively engage with material. It is adaptable and accessible, and can provide customized feedback, real time clarification and multilingual support. Its versatility as an educational tool is evident across diverse educational settings.

Generative AI technology promises to change the future of programming but exactly how remains to be seen. Given that 30% of the LCCS requires students to write and manipulate code directly, this could change not only the nature of programming examinations like the LCCS but also how people program computers in general, and therefore how programming is taught and learned. Codex, a Generative AI in the GPT family superseded by ChatGPT, has been shown in research studies to perform above the 75th percentile of real university students in programming courses (see here and here).

Programming can be mentally challenging, and progress – especially when learning – can seem slow. Generative AI can mitigate these challenges. In fact, professional programmers using GitHub Copilot – powered by OpenAI models – cite speed, programmer satisfaction, and the conservation of mental energy amongst the biggest reasons they use it.

The nature of programming may also change from programmers directly entering line after line of code character by character on a keyboard, to entering prompts into a Generative AI model like ChatGPT. Devising prompts that get the desired results is known as prompt engineering and involves typing program descriptions in a natural language like English rather than typing code in a programming language. Prompt engineering is emerging as a new profession with some salaries in the six-figure range, and it is already being taught to students. Will prompt engineering become the new programming? At some point in the future will prompt engineering be a large part of learning programming and therefore a large part of programming exams? At this point, only time will tell.

Joyce Mahon is a Computer Science PhD student at UCD and part of the Science Foundation Ireland Centre for Research Training in Machine Learning (www.ml-labs.ie) supervised by Dr. Brett Becker and Dr. Brian Mac Namee.

Dr. Brian Mac Namee is an Associate Professor at the UCD School of Computer Science, director of the Science Foundation Ireland Centre for Research Training in Machine Learning (www.ml-labs.ie) and co-author of the textbook “Fundamentals of Machine Learning for Predictive Data Analytics” (https://machinelearningbook.com/).

Dr. Brett Becker (www.brettbecker.com) is an Assistant Professor in the UCD School of Computer Science and Dr. Keith Quille (www.keithquille.com) is a Senior Lecturer at Technological University Dublin. They are the authors of the textbook “Computer Science for Leaving Certificate” (https://goldenkey.ie/computer-science-for-leaving-cert/).

The model used in this work was ChatGPT Plus (GPT-4: May 3, 2023 version).

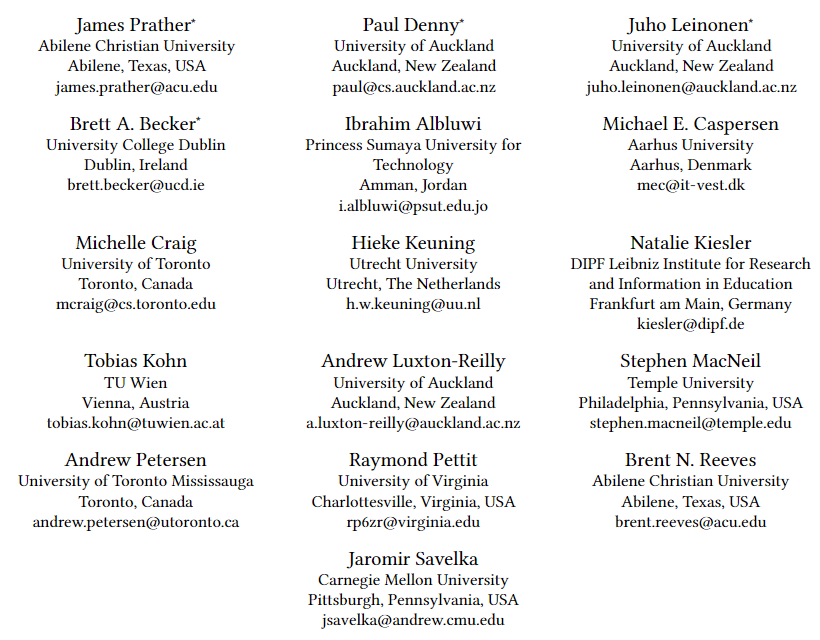

I’m thrilled to be a co-leader of Working Group 4 at ITiCSE 2023 this year: Transformed by Transformers: Navigating the AI Coding Revolution for CS Education.

Really looking forward to how this develops! Here’s a screenshot of the Brady Bunch:

We have been meeting weekly for over a month and, despite timezone differences spanning several continents from North America to Australasia, we have 14-16 (of 16) on the call every week – amazing.

Abstract:

The recent advent of highly accurate and scalable large language models (LLMs) has taken the world by storm. From art to essays to computer code, LLMs are producing novel content that until recently was thought only humans could produce. Recent work in computing education has sought to understand the capabilities of LLMs for solving tasks such as writing code, explaining code, creating novel coding assignments, interpreting programming error messages, and more. However, these technologies continue to evolve at an astonishing rate leaving educators little time to adapt. This working group seeks to document the state-of-the-art for code generation LLMs, detail current opportunities and challenges related to their use, and present actionable approaches to integrating them into computing curricula.

This week I am co-organizing Dagstuhl Seminar 22442 – Toward Scientific Evidence Standards in Empirical Computer Science. We’re thrilled that 22 people made it from as far away as Australia, Canada, and the US along with colleagues from Finland, Germany, Ireland, Scotland and Switzerland. This post will be updated in several months when the report for this seminar is published.

Brett A. Becker (University College Dublin, IE)

Christopher D. Hundhausen (Oregon State University – Corvallis, US)

Ciera Jaspan (Google – Mountain View, US)

Andreas Stefik (University of Nevada – Las Vegas, US)

Thomas Zimmermann (Microsoft Corporation – Redmond, US)

Registration for ITiCSE 2022 is now open at iticse.acm.org/2022 and Early-Bird registration closes May 31 so now is the time to register!

This year ITiCSE is being held in Dublin, Ireland at University College Dublin.

Conference dates are July 11th to July 13th, 2022. There is a reception on Sunday evening, July 10, along with the traditional ITiCSE excursion and banquet on Tuesday afternoon and evening.

Held annually in Europe, the ACM SIGCSE conference on Innovation and Technology in Computer Science Education is the second-oldest and second-largest computer science education conference.

SIGCSE members will have noticed that they received an email on April 4 asking them to vote for the new SIGCSE board that will serve from 2022-2025. That means it’s time to get out and Vote! If you can’t find the email, search for subject “ACM SIG 2022 Election”.

Special thanks are due to the current SIGCSE Board for their dedication and leadership through what may have been the most challenging term that any board has faced. SIGCSE made it through what the pandemic has thrown at us so far in admirable fashion despite numerous – and often 11th hour – setbacks. I’m glad that Portland seems now so long ago!

The 2022 election slate is listed below (also available here). I’m thrilled to be running for Vice Chair, a position currently held by Dan Garcia. My good colleague Paul Denny is also running for Vice Chair. Unfortunately that means that no matter what the election outcome is, we won’t be serving together 🙁

Special thanks to Amber Settle, SIGCSE immediate past chair and SIGCSE 2022 election committee chair, and the 2022 SIGCSE Election Committee:

Rock the Vote!

ITiCSE 2021, the 26th annual conference on Innovation and Technology in Computer Science Education, is being held virtually in Paderborn, Germany. The main conference is June 28 – July 1. More information and registration are available on the website https://ITiCSE.acm.org.

The term of the current Editor-in-Chief (EiC) of the ACM Transactions on Computing Education (TOCE) (https://dl.acm.org/journal/toce) is coming to an end, and the ACM Publications Board has set up a nominating committee to assist the Board in selecting the next EiC. TOCE was established in 2001 and is a premier journal for computing education, publishing over 30 papers annually.

Nominations, including self-nominations, are invited for a three-year term as TOCE EiC, beginning on September 1, 2021. The EiC appointment may be renewed at most one time. This is an entirely voluntary position, but ACM will provide appropriate administrative support.

Appointed by the ACM Publications Board, Editors-in-Chief (EiCs) of ACM journals are delegated full responsibility for the editorial management of the journal consistent with the journal’s charter and general ACM policies. The Board relies on EiCs to ensure that the content of the journal is of high quality and that the editorial review process is both timely and fair. The EiC has the final say on acceptance of papers, size of the Editorial Board, and appointment of Associate Editors. A complete list of responsibilities is found in the ACM Volunteer Editors Position Descriptions (http://www.acm.org/publications/policies/position_descriptions).

Additional information can be found in the following documents:

Nominations should include a vita along with a brief statement of why the nominee should be considered. Self-nominations are encouraged. Nominations should include a statement of the candidate’s vision for the future development of TOCE. The deadline for submitting nominations is July 21, 2021, although nominations will continue to be accepted until the position is filled.

Please send all nominations to the nominating committee chairs, Mark Guzdial (mjguz AT umich DOT edu) and Betsy DiSalvo (bdisalvo AT cc.gatech DOT edu).

The search committee members are:

Louiqa Raschid (University of Maryland) will serve as the ACM Publications Board Liaison