Joyce Mahon, a Computer Science PhD student at University College Dublin supervised by Dr. Brett Becker and Dr. Brian Mac Namee, has put ChatGPT to the test: the Leaving Certificate Computer Science (LCCS) Higher Level exam, no less!

Can ChatGPT Plus successfully pass the Higher Level LCCS examination?

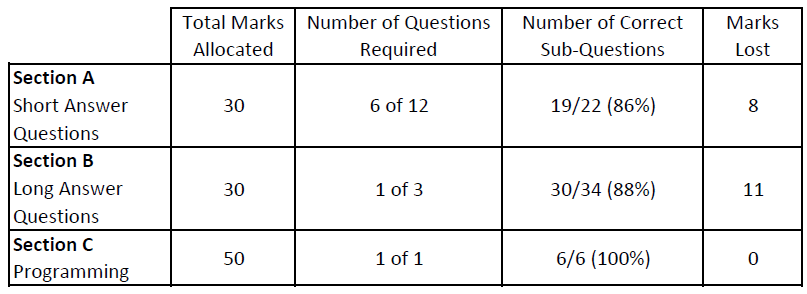

In short: yes, it can, answering nearly all questions correctly. Computer Science at Leaving Certificate level consists of two assessment components: an end-of-course examination (70%) and coursework assessment (30%). We used the 2021 LCCS Higher Level examination, which comprised 16 questions in three sections (A, B and C), not all of which are mandatory. These questions are broken down into a total of 62 sub-questions. We extracted the text of all 62 sub-questions and presented each one to ChatGPT in a fresh chat session, with no extra information. We then marked ChatGPT's answers using the official Leaving Certificate marking scheme. As we expected, the large majority of these (55/62) were answered correctly.

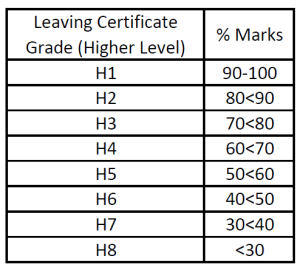

There is an element of choice in this examination. If only ChatGPT's best answers are counted, it would receive very close to (or possibly exactly) 100 percent, depending on the marker and their interpretation of the marking criteria. This would earn ChatGPT an H1 on the examination, the highest grade possible (in this particular exam: Higher Level, 2021). As the examination comprises 70% of the overall grade for this subject, a near-perfect exam score alone is worth about 70% overall, so a grade of H3 could potentially be achieved even before considering the coursework component worth 30% (see table below).
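The arithmetic behind the H3 claim can be sketched in a few lines of Python. This is a minimal illustration only, assuming the standard Leaving Certificate Higher Level grade bands (H1 for 90-100%, then 10-point bands down to H7 for 30-39% and H8 below 30%) and the 70/30 exam/coursework weighting described above. The function names are hypothetical, and this is not how the State Examinations Commission actually computes results.

```python
# Hypothetical sketch: combine LCCS component scores and map to a Higher Level grade.
# Assumes the standard H1-H8 bands and a 70% exam / 30% coursework weighting.

HIGHER_LEVEL_BANDS = [
    (90, "H1"), (80, "H2"), (70, "H3"), (60, "H4"),
    (50, "H5"), (40, "H6"), (30, "H7"),
]


def overall_percent(exam_percent: float, coursework_percent: float) -> float:
    """Weighted overall mark: 70% exam, 30% coursework (integer weights avoid float drift)."""
    return (70 * exam_percent + 30 * coursework_percent) / 100


def higher_level_grade(percent: float) -> str:
    """Return the first band whose lower bound the mark reaches; below 30% is H8."""
    for lower_bound, grade in HIGHER_LEVEL_BANDS:
        if percent >= lower_bound:
            return grade
    return "H8"


# A perfect exam with no coursework marks at all.
exam_only = overall_percent(100, 0)
print(exam_only, higher_level_grade(exam_only))  # 70.0 H3
```

Scoring 100% on the exam alone gives 70% overall, which sits at the bottom of the H3 band; any coursework marks could only raise the overall grade.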

ChatGPT provides incorrect answers to some questions. Should we discard it as an educational tool?

Generative AI models such as ChatGPT are not infallible. Does this mean that they should be avoided? We are taught early on not to believe everything that we read. Perhaps today's students should also be taught not to believe everything a large language model provides. Similarly, in important situations, people should always double-check things. Carpenters measure twice so they cut (successfully) once. Students in traditional exams are encouraged to double-check their answers. The same applies when using ChatGPT. If anything, this is a good lesson for students to learn.

Besides, the performance of large language models (LLMs) on certain tasks (such as the one here) is impressive, and they are constantly improving. In our experiment, many solutions contained more detail than the marking scheme prescribes. These models also have other benefits. For instance, they can provide students (and educators) with unlimited examples, alternative approaches, and help with "blank page syndrome". These models can even mark their own answers, although we have not yet tested this rigorously.

Does the fact that ChatGPT can ace the LCCS diminish the integrity of the exam?

In a word: no. The Leaving Certificate is an invigilated exam, and it was invigilated long before Generative AI existed, for good reason. The advent of this technology is just another (albeit fast and large) step in the direction humans have been moving for ages. In addition, 30% of the LCCS grade comes from coursework, which is overseen by the student's teacher. While ChatGPT could be used for this coursework, the project work involved is much more demanding than the questions found on a paper exam. It is likely that the technology is still some way from performing as well on this coursework as it does on the paper exam.

Is ChatGPT only useful for LCCS?

No. ChatGPT has been shown to be capable of generating acceptable answers for many types of exam across various subjects. Recently, ChatGPT passed a Higher Level English Leaving Certificate exam with ease (77%), and OpenAI has reported results from simulated runs of various professional and academic exams.

ChatGPT provides rapid responses and a personalized learning experience in which students can actively engage with material. It is adaptable and accessible, and it can provide customized feedback, real-time clarification and multilingual support. This versatility makes it applicable as an educational tool across diverse educational settings.

What Will the Future of Coding and Programming Exams Look Like?

Generative AI technology promises to change the future of programming, but exactly how remains to be seen. Given that 30% of the LCCS requires students to write and manipulate code directly, this could not only change the nature of programming examinations like the LCCS but also fundamentally change how people program computers in general, and therefore how programming is taught and learned. Codex, a Generative AI model in the GPT family since superseded by ChatGPT, has been shown in research studies to perform above the 75th percentile of real university students in programming courses (see here and here).

Programming can be mentally challenging, and progress, especially when learning, can seem slow. Generative AI can help mitigate these challenges. In fact, professional programmers using GitHub Copilot, which is built on OpenAI's GPT-family models, cite speed, programmer satisfaction, and the conservation of mental energy among the biggest reasons they use it.

The nature of programming may also change, from programmers entering code line by line, character by character, on a keyboard, to entering prompts into a Generative AI model like ChatGPT. Devising prompts that get the desired results is known as prompt engineering, and it involves typing program descriptions in a natural language such as English rather than writing code in a programming language. Prompt engineering is emerging as a new profession, with some salaries in the six-figure range, and it is already being taught to students. Will prompt engineering become the new programming? At some point in the future, will prompt engineering be a large part of learning programming, and therefore a large part of programming exams? At this point, only time will tell.

About the Authors

Joyce Mahon is a Computer Science PhD student at UCD and part of the Science Foundation Ireland Centre for Research Training in Machine Learning (www.ml-labs.ie), supervised by Dr. Brett Becker and Dr. Brian Mac Namee.

Dr. Brian Mac Namee is an Associate Professor at the UCD School of Computer Science, director of the Science Foundation Ireland Centre for Research Training in Machine Learning (www.ml-labs.ie) and co-author of the textbook “Fundamentals of Machine Learning for Predictive Data Analytics” (https://machinelearningbook.com/).

Dr. Brett Becker (www.brettbecker.com) is an Assistant Professor in the UCD School of Computer Science, and Dr. Keith Quille (www.keithquille.com) is a Senior Lecturer at Technological University Dublin. They are the authors of the textbook "Computer Science for Leaving Certificate" (https://goldenkey.ie/computer-science-for-leaving-cert/).

Notes

The model used in this work was ChatGPT Plus (GPT-4: May 3, 2023 version).